In the fall semester 2019/20, several groups of master's students of the Engineering and Management Department (09) of the Munich University of Applied Sciences were tasked with planning new assembly lines and factory sections while weighing up different alternatives. Part of the task set by their teacher, Prof. Dr. Spitznagel, was to select suitable software as a planning tool. Consequently, several evaluation tasks had to be solved at the same time. A cost-utility analysis immediately seemed appropriate.

The students gave us an insight into their work and the achieved results and told us about their experience, for which we are very grateful.

The Engineering and Management Department of the Munich University of Applied Sciences is one of the most renowned talent pools for industrial engineers. Close contacts with corporate practice have positive effects on the application-oriented study. The students can practice entrepreneurial behavior in various projects and acquire essential key competencies.

https://www.hm.edu/

In one of our previous blog contributions, we introduced distance learning using the example of evaluating layout variants in factory planning. We would like to take this opportunity to extend the blog series on cost-utility analysis with this application example.

Approach for selecting the appropriate software

Selecting the appropriate software by means of a cost-utility analysis generally follows the same approach as any comparison of variants:

- Definition of the evaluation criteria (targets)

- Weighting of the evaluation criteria

- Determination of the costs (efforts)

- Calculation of the utility values

- Selection of the objectively best solution

The prerequisite for evaluating alternatives is, however, that alternatives exist in the first place. This might sound trivial, but finding a suitable shortlist of possible planning tools is often quite difficult in practice, because the search must be conducted in a structured manner if you want to find the right solution.

Step 1: Defining evaluation criteria

For this reason, the students first defined the evaluation criteria relevant to them. This helps to keep the focus when searching. After all, online research is popular, and the providers know how to use the technical possibilities. For example, they like to draw attention to unique features of their own product. Features that only touch on the original demand profile instead of meeting it fully can easily capture the searcher's attention.

The students were also aware of this, especially because they mainly used the providers' websites as sources when searching for state-of-the-art software. Reviews and conversations with experienced factory planners also yielded useful input. Konstantin Frank, spokesperson of one of the seven student groups, describes the experience of his group as follows:

“We had to avoid overlooking important criteria. We found assistance in the literature. For example, Schenk, Wirth and Müller listed numerous criteria that could be used for the assessment of factory planning tools in their ‘Factory Planning Manual’ (Heidelberg: Springer, 2014). We discussed in the team which criteria are most relevant for our concrete planning project.”

The results are represented in the following list:

- Hardware requirements

- Adaptability

- Interfaces

- Service

- Introduction

- User friendliness

- Maintenance and updates

- Costs

At this point, Mr. Frank emphasized how important it is that everybody in the team has the same understanding of the individual criteria. For example, it had to be clarified whether paid updates are part of the “Costs” criterion or part of “Maintenance and updates”.

It is advisable to specify a measuring system for the criteria found already in this relatively early phase. Strictly speaking, it is only required in step 3, when the partial utility values are determined, but defining it now fosters a common understanding of the criteria. A suitable means is the degree of performance, which describes how close an implementation variant is to the target value of a criterion. Degrees of performance can be applied to both ordinally and cardinally scaled features.
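As a rough illustration of how a degree of performance could be derived for both scale types, the following Python sketch maps an ordinal rating via a lookup table and a cardinal measurement via linear interpolation between a worst acceptable value and the target value. The function name `degree_from_cardinal`, the rating labels, and the example of an introduction period measured in days are assumptions made for illustration; they are not taken from the students' project.

```python
# Hypothetical ordinal scale: verbal ratings mapped to degrees of performance 1..5.
ORDINAL_SCALE = {"very poor": 1, "poor": 2, "average": 3, "good": 4, "very good": 5}


def degree_from_cardinal(value, worst, target, levels=5):
    """Map a measured value linearly onto the degrees 1..levels.

    `worst` yields degree 1 and `target` yields the highest degree.
    The target may be smaller than the worst value (e.g. for efforts or costs).
    """
    closeness = (value - worst) / (target - worst)  # 0.0 at worst, 1.0 at target
    closeness = min(1.0, max(0.0, closeness))       # clamp values outside the range
    return 1 + round(closeness * (levels - 1))


# Assumed example: introduction period in days, target 2 days, worst case 22 days.
introduction_degree = degree_from_cardinal(value=7, worst=22, target=2)
service_degree = ORDINAL_SCALE["good"]
print(introduction_degree, service_degree)
```

Whether a linear mapping is appropriate depends on the criterion; a team could just as well agree on fixed value ranges per degree.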

Step 2: Weighting of the evaluation criteria

The weighting of the evaluation criteria is the source of most misjudgments regarding the objectivity of the results of a cost-utility analysis. The perceptions in practice range from “rolling dice” to “Queen trumps jack”. In fact, this step can be managed well in terms of methodology. The pairwise (or paired) comparison is mentioned here as an example. We have already explained this procedure here in the blog.
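To make the idea of a pairwise comparison more tangible, here is a minimal Python sketch. It assumes one common scoring variant (2 points for the more important criterion, 1 point each if both are equally important, 0 for the less important one) and normalizes the points to weights. The function name `pairwise_weights` and the judgments shown are hypothetical; they are neither the students' data nor necessarily the exact procedure described in the earlier blog contribution.

```python
from itertools import combinations


def pairwise_weights(criteria, preferences):
    """Derive weights from pairwise judgments.

    `preferences` maps a pair (a, b) to 2 if a is more important than b,
    to 1 if both are equally important, and to 0 if b is more important.
    """
    points = {criterion: 0 for criterion in criteria}
    for a, b in combinations(criteria, 2):
        score = preferences[(a, b)]
        points[a] += score
        points[b] += 2 - score
    total = sum(points.values())
    return {criterion: points[criterion] / total for criterion in criteria}


# Hypothetical judgments for three of the criteria (not the students' data).
criteria = ["User friendliness", "Interfaces", "Costs"]
preferences = {
    ("User friendliness", "Interfaces"): 2,
    ("User friendliness", "Costs"): 2,
    ("Interfaces", "Costs"): 1,
}
weights = pairwise_weights(criteria, preferences)
for criterion, weight in weights.items():
    print(f"{criterion}: {weight:.0%}")
```

Because every pair is judged separately, the team only has to answer one simple question at a time, which makes the resulting weights easier to document and defend.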

That this perception nevertheless persists can often be attributed to errors that users have encountered in cost-utility analyses. In particular, the mixing of evaluation and weighting should be mentioned here. This is also the reason why the evaluation criteria should always be weighted and documented in a separate step, even if tools such as Excel encourage users to create integrated spreadsheets.

The objective of the weighting is to ensure that the degrees of performance determined in step 3 for the individual evaluation criteria enter the overall result in accordance with their significance for those who perform the assessment. Mr. Frank used the following example to explain this aspect:

“It was our objective to create expedient planning concepts within as short a time as possible and with an introduction phase as short as possible. Correspondingly, we weighted user friendliness substantially higher than costs, for example.”

Other organizations pursue other objectives. Therefore, the weighting factors found by the students cannot be transferred directly. Nevertheless, certain tendencies can be identified. For example, none of the criteria is dominant, which speaks in favor of a certain balance among the criteria found.

| Evaluation criterion | Weighting |
| --- | --- |
| Hardware requirements | 5% |
| Adaptability | 10% |
| Interfaces | 15% |
| Service | 15% |
| Introduction | 10% |
| User friendliness | 25% |
| Maintenance and updates | 10% |
| Costs | 10% |

Furthermore, the relatively heavily weighted criterion “User friendliness” is in all probability also of higher importance for other groups than service or introduction, for example, because this criterion reflects the desire of many users for self-explanatory software. In factory planning in particular, you typically do not work with the system on a daily basis. There are often many days or sometimes even weeks between the individual work sessions, during which primarily other systems are used. It is all the more annoying if you must always try to understand the system anew because it cannot be operated intuitively. Even the most comprehensive training will not help here: no routine develops, the imparted knowledge cannot be consolidated, and it is lost.

Step 3: Determination of the costs (efforts)

The next task was to evaluate the alternatives found for the factory planning software. Some authors in the field of cost-utility analysis call this step “determination of the partial utility values”. Other writers prefer the effort method and associate low effort with low costs and thus better target compliance.

Which evaluation perspective you select depends on the use case. It is, however, important that a close-to-target implementation of a feature is also reflected in a high partial utility value. If you use degrees of performance from 1 to 5 for an evaluation criterion, for example, then you will usually assign the point value 5 for very low effort or costs, or for full target compliance.

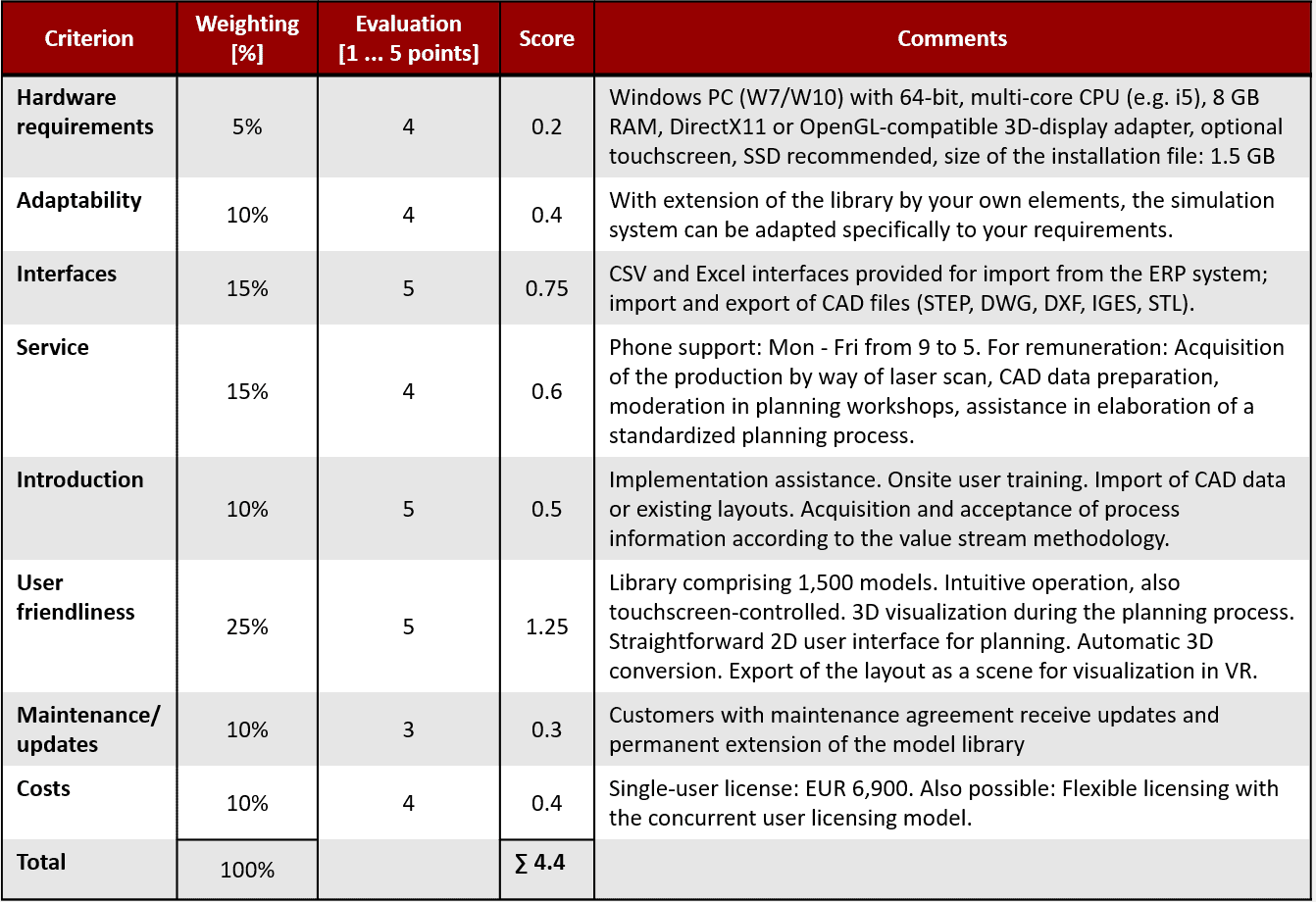

The following example demonstrates this: the evaluation scheme that Mr. Frank's student group used in fall 2019 for the visTABLE® software.

Degrees of performance from 1 to 5 were selected. For example, 4 out of 5 possible points were awarded for the criterion “Hardware requirements” in an ordinal evaluation. This means that the evaluators share a common idea of the ideal hardware requirements, which they associate with even lower costs or possibly better availability within the group. visTABLE® does not meet this idea completely, but substantially better than the average of the assessed planning systems.

(source: Frank, Konstantin)

This points system also makes it possible to define non-fulfillment, or a lower limit for how well a feature must be fulfilled, as a knockout criterion. If an alternative does not reach the specified knockout threshold for an evaluation criterion, the entire alternative need not be considered any longer. Mr. Frank gives the following example:

“This was the case, for example, if a planning software required special hardware beyond the performance of a standard office computer.”
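A knockout check of this kind can be sketched in a few lines of Python. The tool names, the degrees of performance, and the threshold value below are purely hypothetical and serve only to illustrate the mechanism; `apply_knockouts` is an illustrative helper, not part of any tool mentioned in this article.

```python
def apply_knockouts(alternatives, thresholds):
    """Return only the alternatives that reach every knockout threshold."""
    return {
        name: degrees
        for name, degrees in alternatives.items()
        if all(degrees[criterion] >= minimum for criterion, minimum in thresholds.items())
    }


# Hypothetical degrees of performance (1..5); not the students' actual ratings.
alternatives = {
    "Tool X": {"Hardware requirements": 4, "User friendliness": 5},
    "Tool Y": {"Hardware requirements": 1, "User friendliness": 4},
}
# Assumed threshold: a degree below 2 means that special hardware would be required.
thresholds = {"Hardware requirements": 2}

remaining = apply_knockouts(alternatives, thresholds)
print(sorted(remaining))
```

Applying the knockout check before calculating any utility values saves effort, since excluded alternatives do not need to be rated on the remaining criteria.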

It is logical that a knockout criterion can only be applied to evaluation criteria that can be reliably identified or assessed. The students therefore paid special attention to obtaining as much information as possible about the factory planning software. In particular, the following were used as sources for the assessment:

- the websites of the respective providers,

- telephone calls with the respective sales and marketing departments,

- reviews,

- trial licenses, and

- interviews with experienced factory planners.

Step 4: Calculation of the utility values

The calculation of the utility values is a formal arithmetic operation. The Excel template from our previous contribution on the topic of cost-utility analysis will certainly help save some effort.

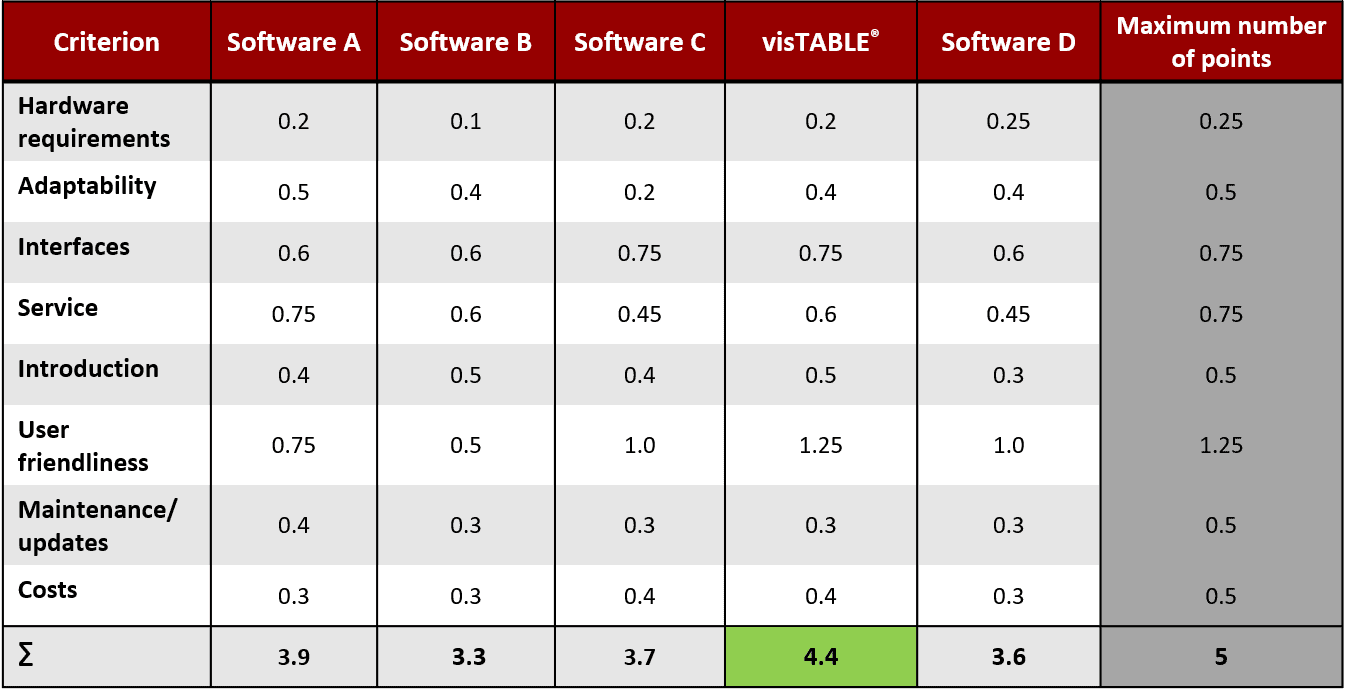

The students used their own table to compare five planning tools. The last row contains the utility values. For Mr. Frank's workgroup, visTABLE® reached the greatest utility value with 4.4 out of 5 possible points. Tools with lower utility values were given neutral names.

An interesting feature is that the last column shows the maximum possible number of points for each partial utility value. These points are determined for each evaluation criterion as the product of the weighting factor and the maximum degree of performance. The criterion “User friendliness”, for example, can never reach more than 1.25 points (i.e. 25% of 5) with a weight of 25% and the maximum degree of 5 used here. This illustrates vividly how far the individual alternatives actually are from the target for each evaluation criterion.
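The arithmetic behind the utility value and the maximum partial utility values can be sketched as follows. The weights are those from the weighting table in step 2; the degrees of performance for “Tool X” are hypothetical and do not reproduce the students' ratings, and the function names are illustrative only.

```python
MAX_DEGREE = 5

# Weights in percent, as listed in the weighting table in step 2.
weights = {
    "Hardware requirements": 5,
    "Adaptability": 10,
    "Interfaces": 15,
    "Service": 15,
    "Introduction": 10,
    "User friendliness": 25,
    "Maintenance and updates": 10,
    "Costs": 10,
}


def utility_value(degrees, weights):
    """Sum of the partial utility values (weight x degree of performance)."""
    return sum(weights[c] * degrees[c] for c in weights) / 100


def max_partial_utilities(weights, max_degree=MAX_DEGREE):
    """Highest partial utility value each criterion can contribute."""
    return {c: w * max_degree / 100 for c, w in weights.items()}


# Hypothetical degrees of performance for an unnamed tool (illustration only).
tool_x = {
    "Hardware requirements": 4,
    "Adaptability": 3,
    "Interfaces": 4,
    "Service": 5,
    "Introduction": 4,
    "User friendliness": 5,
    "Maintenance and updates": 3,
    "Costs": 3,
}

print(f"Utility value: {utility_value(tool_x, weights):.2f} of {MAX_DEGREE}")
print(f"Max. for user friendliness: {max_partial_utilities(weights)['User friendliness']}")
```

Storing the weights as whole percentages and dividing once at the end keeps the intermediate sums exact and makes it easy to verify that the weights add up to 100.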

Step 5: Selection of the objectively best solution

In the end, you will have to decide on a software for factory planning. The students decided not to take the easy way out. To remove any remaining doubts about the result of the cost-utility analysis, they compared the software in second place directly with the software that took first place. Mr. Frank explains the framework conditions:

“Nevertheless, you should also consider the second-place selection in more detail, especially when the gap to first place is only small. For this reason, we compared software A and visTABLE® directly with one another once more. In conclusion, we decided in favor of visTABLE® because of the considerably more intuitive operation.”

If providers offer test versions with the full scope of functions and without major obstacles, a direct comparison is easily done. The cost-utility analysis also helps here, because the aspects to be observed are already contained in the evaluation criteria. A validation of the degrees of performance assessed in step 3 should provide final certainty.

Finally, anyone who gives the provider the opportunity to answer questions about concrete use cases arising from the test will be able to evaluate

- the service, on the one hand, and

- the provider's expertise, on the other.

One more tip here: Sufficient resources (time, personnel) should be reserved for the test, and you should focus on the essentials (defined use cases). No provider will see repeatedly postponed or extended test periods, for example, as an indication of serious interest.

Conclusion

After completion of the student project, and aware of what had been achieved, Mr. Frank drew the following conclusions regarding the effort for the research and for the cost-utility analysis itself:

“This is really a matter of putting in the effort, but it’s worth it, because the selection of the right planning software is essential for the success of the project. […] We were able to achieve our planning goals as desired thanks to the software. The effort for the preceding cost-utility analysis definitely paid off.”